High-Performance Computing Working Group at the IMAG/MSM

Working Group Lead: Ravi Radhakrishnan

Email: rradhak@seas.upenn.edu

Synopsis:

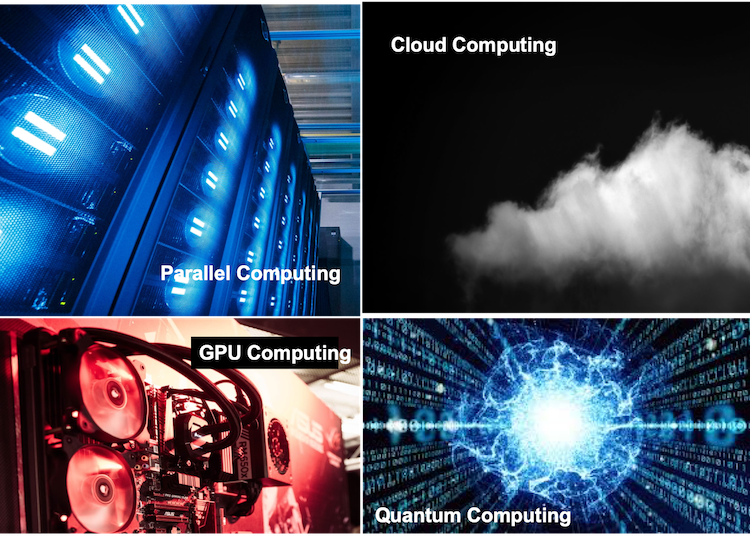

Research problems in the biological and biomedical sciences and engineering often span multiple time and length scales, from the molecular to the organ/organism level, owing to the complexity of the information transfer that underlies biological mechanisms. Multiscale modeling (MSM) and high-performance scientific computing (HPC) have emerged as indispensable tools for tackling such complex problems. However, a paradigm shift in training is now necessary to leverage the rapid advances in HPC and the emerging paradigms (see Figure 1) that will define the 21st century: GPU computing, cloud computing, exascale supercomputing, and quantum computing.

Figure 1: HPC paradigms, current and future (parallel computing, cloud computing, GPU computing, and quantum computing)

Reports and Review Articles:

HPC technology is evolving rapidly, and its development both complements and reinforces that of scientific computing. To help scholars get started, we recommend the following reports, review articles, and resources as starting points.

Integrating Machine Learning and Multiscale Modeling: Perspectives, Challenges, and Opportunities in the Biological, Biomedical, and Behavioral Sciences

Mark Alber, Adrian Buganza Tepole, William R. Cannon, Suvranu De, Salvador Dura-Bernal, Krishna Garikipati, George Karniadakis, William W. Lytton, Paris Perdikaris, Linda Petzold & Ellen Kuhl

npj Digital Medicine, Volume 2, Article number 115 (2019)

DOI: https://doi.org/10.1038/s41746-019-0193-y

arXiv preprint: https://arxiv.org/pdf/1910.01258.pdf

The Landscape of Parallel Computing Research: A View from Berkeley

Krste Asanović, Ras Bodik, Bryan Christopher Catanzaro, Joseph James Gebis, Parry Husbands, Kurt Keutzer, David A. Patterson, William Lester Plishker, John Shalf, Samuel Webb Williams and Katherine A. Yelick

URL: http://www2.eecs.berkeley.edu/Pubs/TechRpts/2006/EECS-2006-183.pdf

GPU computing for systems biology

Lorenzo Dematté, Davide Prandi

Briefings in Bioinformatics, Volume 11, Number 3, pages 323-333 (2010)

DOI: https://doi.org/10.1093/bib/bbq006

A scoping review of cloud computing in healthcare

Lena Griebel, Hans-Ulrich Prokosch, Felix Köpcke, Dennis Toddenroth, Jan Christoph, Ines Leb, Igor Engel & Martin Sedlmayr

DOI: https://doi.org/10.1186/s12911-015-0145-7

Quantum computing

Andrew Steane

Reports on Progress in Physics, Volume 61, Number 2

DOI: https://doi.org/10.1088/0034-4885/61/2/002

MSM/IMAG Webinars and Workshops related to HPC

1. Life Sciences on High Performance Computing (HPC) Environment (2013)

2. Bridging Multiple Scales in Modeling Targeted Drug Nanocarrier Delivery (2014)

3. IMAG Multiscale Modeling Funding Opportunity - Informational Webinar (2015)

4. Low resolution models for mesoscale structure and thermodynamics of soft materials (2015)

5. A Summary of the Working Group Break-Out Discussion (2015)

6. A Summary of the Working Group Break-Out Discussion (2017)

7. Machine Learning and Multiscale Modeling Workshop at IMAG/MSM (2019)

Both ML/AI and MSM depend heavily on HPC, and IMAG has played a leading role in discussing emerging frontiers at the intersection of ML/AI and MSM. A summary of the 2019 ML-MSM symposium organized by MSM/IMAG, including video recordings of the webcast, is available at the link below. A perspective article on this meeting, published in 2019, is listed under Reports and Review Articles above.

URL: https://www.imagwiki.nibib.nih.gov/content/2019-ml-msm-all-meeting-information

8. Advisory Committee to the NIH Director (ACD*) Workshop (2019)

Reports from the NIH data science infrastructure and NIH AI working groups

URL: https://acd.od.nih.gov/documents/agendas/12132019agenda.pdf

The ACD meeting was videocast: https://videocast.nih.gov/

*Background: The ACD functions somewhat like an Institute Council for all of NIH and meets twice a year. The ACD does not review grants, but it does review trans-NIH concept clearances.

The purpose of this working group is to initiate discussion, foster collaboration, and provide training resources for using HPC methods and platforms, covering:

- Computational resources, including high-performance computing

- Integrated multiscale modeling (MSM) and artificial intelligence (AI) based simulations on emerging computer architectures (Figure 1)

- Computational algorithms, libraries, tool-kits, and software

- Infrastructure and strategies to handle big-data problems

- Webinars on high-performance computing topics

- Workshops on HPC

- Collaborations and training for HPC

High-performance computing (HPC) broadly involves the use of new architectures (such as GPUs), distributed computing, cloud-based computing, and parallel computing on massively parallel platforms or extreme hardware architectures to run computational models. The term applies especially to systems that operate in the regime of very high floating-point operations per second (FLOPS), such as teraflops (10^12), petaflops (10^15), and exaflops (10^18), or to systems that require extensive memory. HPC has remained central to applications in science, engineering, and medicine. Challenges for researchers using HPC platforms and infrastructure range from understanding emerging platforms, to optimizing algorithms for massively parallel architectures, to accessing and handling data efficiently at large scale. The High-Performance Computing Working Group focuses on the contemporary HPC-related issues faced by biomedical researchers.
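To make the FLOPS regimes concrete, the short Python sketch below computes the idealized time needed to finish one fixed workload at each rate. It is illustrative only: the workload size (10^18 floating-point operations) is hypothetical, the helper name `seconds_for` is ours, and it assumes a machine sustains its peak rate, which real applications generally do not.

```python
# Idealized time-to-solution at the FLOPS regimes named in the text.
# Assumptions (illustrative only): the machine sustains its peak rate,
# and the workload size used below is hypothetical.

REGIMES = {
    "teraflops": 1e12,  # 10^12 floating-point operations per second
    "petaflops": 1e15,  # 10^15
    "exaflops": 1e18,   # 10^18
}

SECONDS_PER_DAY = 86_400


def seconds_for(total_flop: float, rate_flops: float) -> float:
    """Return wall-clock seconds to perform total_flop operations at rate_flops."""
    if rate_flops <= 0:
        raise ValueError("rate_flops must be positive")
    return total_flop / rate_flops


if __name__ == "__main__":
    workload = 1e18  # hypothetical workload: 10^18 floating-point operations
    for name, rate in REGIMES.items():
        secs = seconds_for(workload, rate)
        print(f"{name:>10}: {secs:>12,.0f} s ({secs / SECONDS_PER_DAY:.2f} days)")
```

Under these assumptions, each step up in regime divides the time by 1,000: the same workload takes about 11.6 days at one teraflops, roughly 17 minutes at one petaflops, and about one second at one exaflops.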

Training

HPC Virtual Workshops

https://cvw.cac.cornell.edu/topics

Software Carpentry

https://software-carpentry.org/lessons/

Resources and Links:

The Extreme Science and Engineering Discovery Environment (XSEDE)

PEARC (Practice & Experience in Advanced Research Computing) conference (academia-focused)

Supercomputing (SC) conference (industry-focused)

National Science Foundation Office of Advanced Cyberinfrastructure (OAC)

https://www.nsf.gov/div/index.jsp?div=OAC

Multiscale Modeling and Simulation: A SIAM Interdisciplinary Journal

https://www.siam.org/journals/mms.php

PLOS Computational Biology

http://journals.plos.org/ploscompbiol/

HPC Intel

https://www.intel.com/content/www/us/en/high-performance-computing/overview.html

HPC IBM

https://www.ibm.com/cloud-computing/xx-en/solutions/high-performance-computing-cloud/

HPC Google

https://cloud.google.com/solutions/hpc/

HPC Amazon

Quantum Computing on Amazon

https://aws.amazon.com/braket/

Topic: Computing Hardware Resources

- Access to computing resources for MSM needs

- GPUs and other emerging computer hardware for MSM

- MSM and AI on the Cloud

Topic: Computational Algorithms

- Tool-kits, code, and data for MSM/AI on HPC platforms

- Optimized libraries on HPC platforms

- Forums for HPC discussion

Topic: Collaborating and defining the needs of the community

- 20% of all computing time on NSF supercomputers and 10% of time on DOE supercomputers is used by NIH-funded PIs. Would it be beneficial to coordinate access to supercomputers, especially for small- to medium-sized or beginning users?

- Would there be a benefit to organizing focused workshops to discuss and exchange ideas related to HPC and MSM?